How does Notion store its data and scale to millions of users?

The Monolith's Last Stand: A Tale of Shards and Scaling...

Introduction

Notion is a widely popular productivity platform used by millions of users worldwide, managing an immense volume of data including notes, documents, tasks, and collaboration workspaces. As its user base and workload have grown exponentially, Notion has faced significant challenges in maintaining smooth performance and scalability. Handling such a scale requires robust data storage and efficient database management strategies to support rapid growth without compromising reliability.

In this article, we will explore how Notion stores its data and the techniques it employs to scale its database infrastructure effectively, including its transition from a monolithic PostgreSQL setup to a sophisticated sharded architecture.

The data model behind Notion's flexibility

Notion stores data at the most granular level: in blocks. So, what is a block? It’s the smallest unit that makes sense to store independently. For example, an item in a to-do list is stored as its own block. That pays off when you later want to branch the to-do item out further, because the item already stands on its own. Notion stores each block in its database as a record with a handful of attributes.

A few things about this structure:

type - tells the frontend how to render the block. It also makes it easy to transform a block from one type to another. For example, someone can turn a to-do item into a heading. With type, a “changeTo” operation becomes simpler to implement.

properties - as the name suggests, stores the properties of the block, which depend on its type.

content - an ordered list of references to child blocks.

parent - a backlink to the immediate ancestor in the hierarchy. It helps in evaluating access permissions for a child block: Notion traverses up to the root workspace and figures out whether a client is authorized to access this specific block.
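The attributes described above can be sketched as a small record type. This is only an illustration, not Notion's actual schema: the `id` field, the `change_type` and `can_access` helpers, and the `acl` lookup table are hypothetical names introduced here, and the permission check is simplified to a single lookup at the root.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Block:
    id: str                                             # hypothetical unique identifier
    type: str                                           # e.g. "to_do", "heading", "workspace"
    properties: dict[str, Any] = field(default_factory=dict)
    content: list[str] = field(default_factory=list)    # ordered ids of child blocks
    parent: Optional[str] = None                        # id of the immediate ancestor


def change_type(block: Block, new_type: str) -> None:
    """Turn a block into another type; properties and children stay untouched."""
    block.type = new_type


def can_access(block_id: str, user: str, blocks: dict[str, Block],
               acl: dict[str, set[str]]) -> bool:
    """Follow parent links up to the root, then check a (hypothetical) ACL there."""
    current = blocks[block_id]
    while current.parent is not None:
        current = blocks[current.parent]
    return user in acl.get(current.id, set())


workspace = Block(id="ws1", type="workspace", content=["page1"])
page = Block(id="page1", type="page", content=["todo1"], parent="ws1")
todo = Block(id="todo1", type="to_do", properties={"title": "Buy milk"}, parent="page1")

blocks = {b.id: b for b in (workspace, page, todo)}
acl = {"ws1": {"alice"}}  # assumed: access is granted at the workspace level

change_type(todo, "heading")  # the to-do item becomes a heading, title preserved
```

Because the type is just one field, converting a to-do item into a heading leaves its properties and children alone, which is why the "changeTo" operation stays simple.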

How is data stored and retrieved?

After reading Notion’s post, it looks like their servers handle data using operational transformation, a very popular strategy for handling concurrent updates in collaborative editors like Google Docs.
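For readers who have not seen operational transformation before, here is a minimal sketch of the general idea for two concurrent text inserts. It is a textbook-style illustration and not Notion's implementation; the `Insert` type, the `site` tie-breaker, and both helper functions are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Insert:
    pos: int    # index at which the text is inserted
    text: str
    site: int   # hypothetical client id, used only to break ties


def apply(doc: str, op: Insert) -> str:
    """Apply an insert operation to a document string."""
    return doc[:op.pos] + op.text + doc[op.pos:]


def transform(op: Insert, other: Insert) -> Insert:
    """Rewrite `op` so it can be applied after `other` has already been applied."""
    if other.pos < op.pos or (other.pos == op.pos and other.site < op.site):
        # `other` landed before `op`, so `op` shifts right by the inserted length.
        return Insert(op.pos + len(other.text), op.text, op.site)
    return op


doc = "Notion"
a = Insert(pos=0, text="Hello ", site=1)    # client A edits the start
b = Insert(pos=6, text=" blocks", site=2)   # client B edits the end, concurrently

# Each replica applies its own edit first, then the transformed remote edit.
replica_a = apply(apply(doc, a), transform(b, a))
replica_b = apply(apply(doc, b), transform(a, b))
```

Both replicas end up with the same text even though they applied the edits in a different order, which is the property a collaborative editor needs.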

How did Notion handle the growing data?

Notion started with a monolithic PostgreSQL database, but soon ran into trouble as usage grew. Their on-call engineers had to stay constantly alert because of unpredictable CPU spikes. The main headache was the Postgres VACUUM process stalling, which stopped the database from reclaiming disk space from dead rows. Another key issue was the risk of transaction ID wraparound: basically, the database running out of available transaction IDs.

Facing these scaling issues, Notion decided to shard their database. Sharding means horizontally scaling by splitting data across multiple machines. Vertical scaling has its limits; hardware eventually maxes out, and even Postgres can’t handle everything in one place at Notion’s growth rate.

First, they had to choose which tables to shard. Since Notion’s core data is blocks, any block-related table (like comments linked to blocks) needed to live together on the same machine to prevent expensive fetches across shards.

Next came the sharding key. They picked the workspace ID, since the workspace is the parent of all articles, files, folders, etc., so all data for blocks in the same workspace stays in one shard.
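To make the idea of a shard key concrete, here is a minimal routing sketch. The hash-and-modulo mapping and the `shard_for` function are assumptions for illustration; this article does not describe how Notion actually maps a workspace ID to a shard.

```python
import hashlib

NUM_SHARDS = 32  # the shard count Notion started with, per this article


def shard_for(workspace_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a workspace ID to a shard index using a stable hash (assumed scheme)."""
    digest = hashlib.sha256(workspace_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Every block carries its workspace ID, so all blocks of one workspace
# are routed to the same shard and can be fetched without cross-shard queries.
workspace_id = "3f1c2a9e-0000-4000-8000-example"  # hypothetical ID
block_shard = shard_for(workspace_id)
comment_shard = shard_for(workspace_id)
```

A stable hash is used instead of Python's built-in `hash()`, which is randomized per process for strings and would route the same workspace differently after a restart.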

Migrating to shards was tricky, especially with a huge client base and worries about downtime. Notion handled it well, needing only a brief downtime. Their process had four parts. First, double writing to both the old and new DBs using an audit log and a catch-up script (more time spent refining the catch-up script could have further reduced downtime). Second, backfilling old data once double writing had started. Third, "dark reads" for verification: reading from both DBs but using the sharded one only for comparison. Fourth, for offline data checks, a script that randomly sampled and verified contents in both DBs. After this, they fully switched from the monolithic to the sharded database.

They also mentioned a reverse audit log they could use to roll back the migration if something unexpected went wrong.
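The double-write, backfill, and dark-read steps described above can be sketched with in-memory dictionaries standing in for the two databases. Everything here is a simplified assumption: the function names, the shape of the audit log, and the single-process setup are made up for the example and say nothing about how Notion's scripts were written.

```python
from __future__ import annotations

from typing import Optional

old_db: dict[str, str] = {}              # stand-in for the monolith
new_db: dict[str, str] = {}              # stand-in for the sharded database
audit_log: list[tuple[str, str]] = []    # (block_id, value) for every write to old_db
mismatches: list[str] = []               # block ids where the two databases disagreed


def write(block_id: str, value: str) -> None:
    """Writes still go to the old database; the audit log records each one."""
    old_db[block_id] = value
    audit_log.append((block_id, value))


def catch_up() -> int:
    """Replay logged writes into the new database; return how many were applied."""
    applied = len(audit_log)
    for block_id, value in audit_log:
        new_db[block_id] = value
    audit_log.clear()
    return applied


def backfill() -> None:
    """Copy rows that predate double writing, without clobbering newer data."""
    for block_id, value in old_db.items():
        new_db.setdefault(block_id, value)


def dark_read(block_id: str) -> Optional[str]:
    """Serve from the old database, but compare with the new one on the side."""
    served = old_db.get(block_id)
    if new_db.get(block_id) != served:
        mismatches.append(block_id)
    return served


old_db["legacy"] = "written before double writing began"
write("b1", "v1")
catch_up()
backfill()
dark_read("b1")
dark_read("legacy")
```

The point of the dark read is that users never see data from the new database until the mismatch list stays empty, so a bug in the migration shows up as a logged discrepancy rather than as a wrong page.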

Did sharding work for them?

To a good extent, yes. They still saw CPU spikes on some shards during high-load events like New Year and with increasing registrations. In response, they decided to reshard their database—going from 32 shards to 96 shards, tripling the count. What stood out to me wasn’t the resharding itself, but how they executed it.

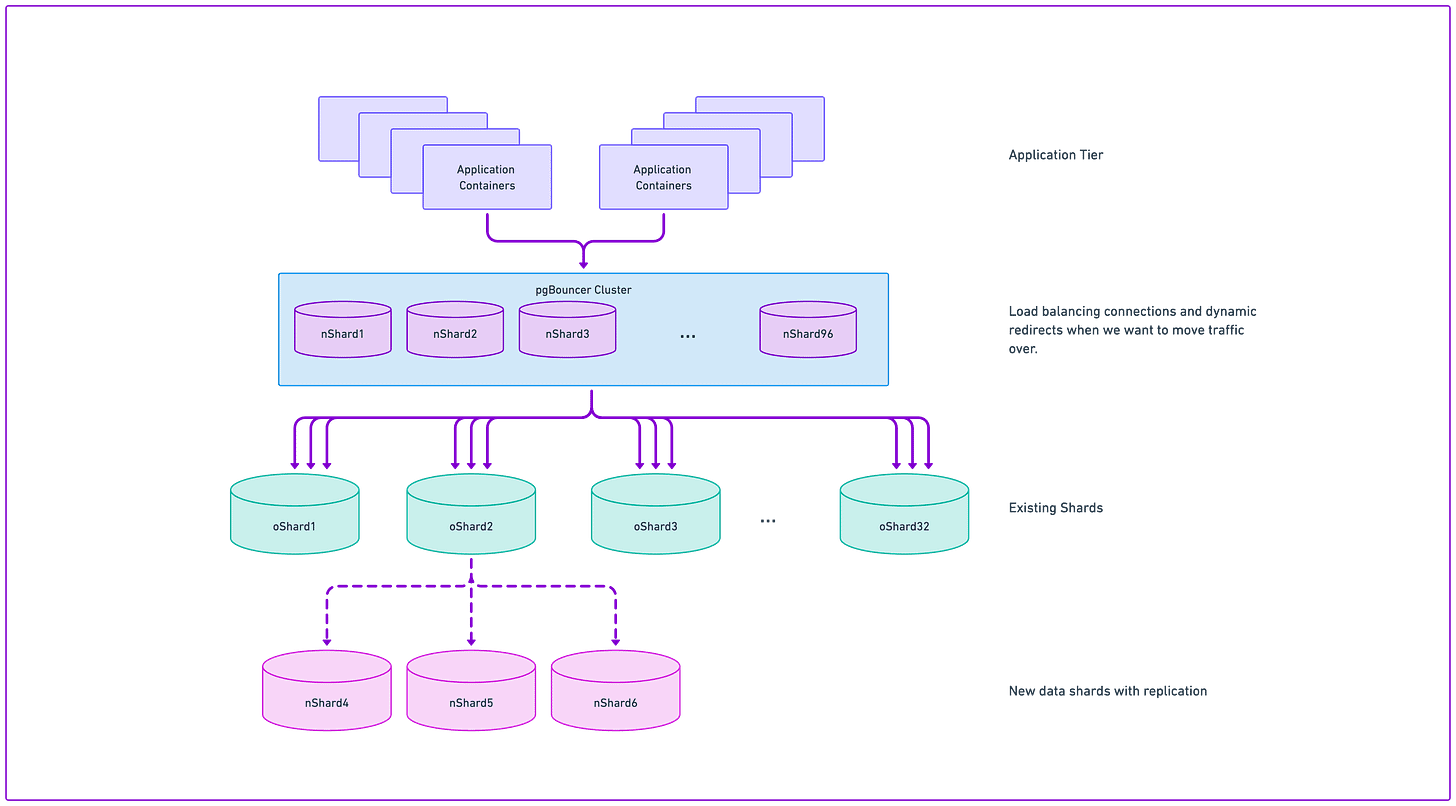

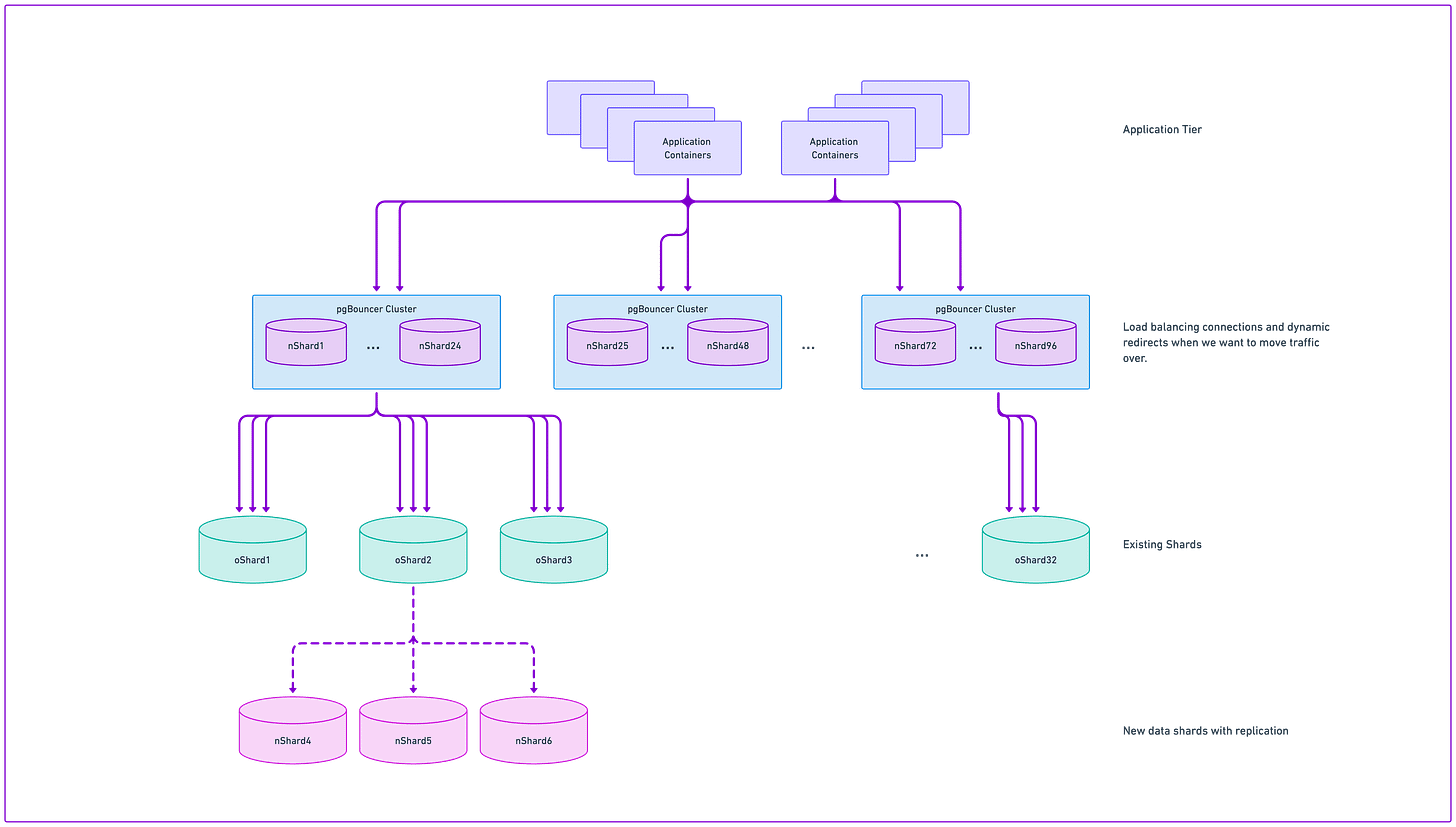

They used PgBouncer, a connection pooler that sits in front of the database shards. Their plan was to add entries for the 96 shards and initially redirect traffic back to the existing 32 shards, gradually migrating traffic to the new shards as they verified everything worked correctly.

The PgBouncer cluster had 100 instances, each opening 6 connections to each of the 32 shards, for a total of 600 connections per shard. If they kept things the same, mapping 96 shard entries onto 32 shards would mean tripling the connections per original shard to 1,800 each.

To avoid that, they split PgBouncer into four groups of 25 instances each (still 100 total). Each group manages 24 new shards, which during the transition map onto 8 old shards. They decided on 8 connections per instance per new shard, which translates to 24 connections per instance per old shard (8 × 3) and, with 25 instances, totals 600 connections per old shard, maintaining the original connection load.
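The connection arithmetic is easier to follow when written out. The snippet below only restates the numbers from the paragraphs above; the variable names are mine, and the final figure (connections per new shard once each one runs on its own machine) is derived from those numbers rather than quoted from Notion.

```python
INSTANCES = 100
OLD_SHARDS = 32
NEW_SHARDS = 96
NEW_PER_OLD = NEW_SHARDS // OLD_SHARDS       # 3 new shard entries point at each old shard

# Before resharding: every instance holds 6 connections to every shard.
before = INSTANCES * 6                        # connections per shard

# Naive plan: same pool settings, 96 entries mapped onto 32 machines.
naive = INSTANCES * 6 * NEW_PER_OLD           # connections per old shard

# Split plan: 4 groups of 25 instances, each group owning 24 new shards.
GROUPS = 4
instances_per_group = INSTANCES // GROUPS     # 25
new_shards_per_group = NEW_SHARDS // GROUPS   # 24
old_shards_per_group = OLD_SHARDS // GROUPS   # 8
conns_per_new_shard = 8                       # per PgBouncer instance

during_migration = instances_per_group * conns_per_new_shard * NEW_PER_OLD
after_cutover = instances_per_group * conns_per_new_shard

print(before, naive, during_migration, after_cutover)
```

So the split keeps each old shard at its original 600 connections during the transition, and each new shard ends up with a third of that once traffic moves over.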

I know this is a lot to take in. If you're looking for more details or a deeper explanation, I'd recommend checking out the third article in the references.

Conclusion

Notion’s sharding story is a great reminder that technical bottlenecks are inevitable as you scale, but how you respond makes all the difference. By moving quickly to partition their data, keeping related information together using smart shard keys, and layering in techniques like double writes, dark reads, backfills, and careful connection management, they pulled off a challenging migration with surprisingly little disruption. It wasn’t just about fixing immediate issues—they kept iterating, even re-sharding when growth demanded it, showing that database strategies need to evolve with your product. The key takeaway: solid engineering and adaptable data design are essential to keeping up with increasing demands.